Exposure to AI models may improve clinical efficiency and care

Summary:

A clinician who repeatedly observed an AI model learned to predict its results and reduced invasive scans for children with hydronephrosis.

What if just observing Artificial Intelligence (AI) could make a clinician more efficient at their job and improve care outcomes for patients?

After repeated use of an AI model, a nurse practitioner at The Hospital for Sick Children (SickKids) was able to analyze ultrasounds more efficiently, ultimately reducing the need for invasive scans and illuminating a previously undescribed impact of AI in clinical settings.

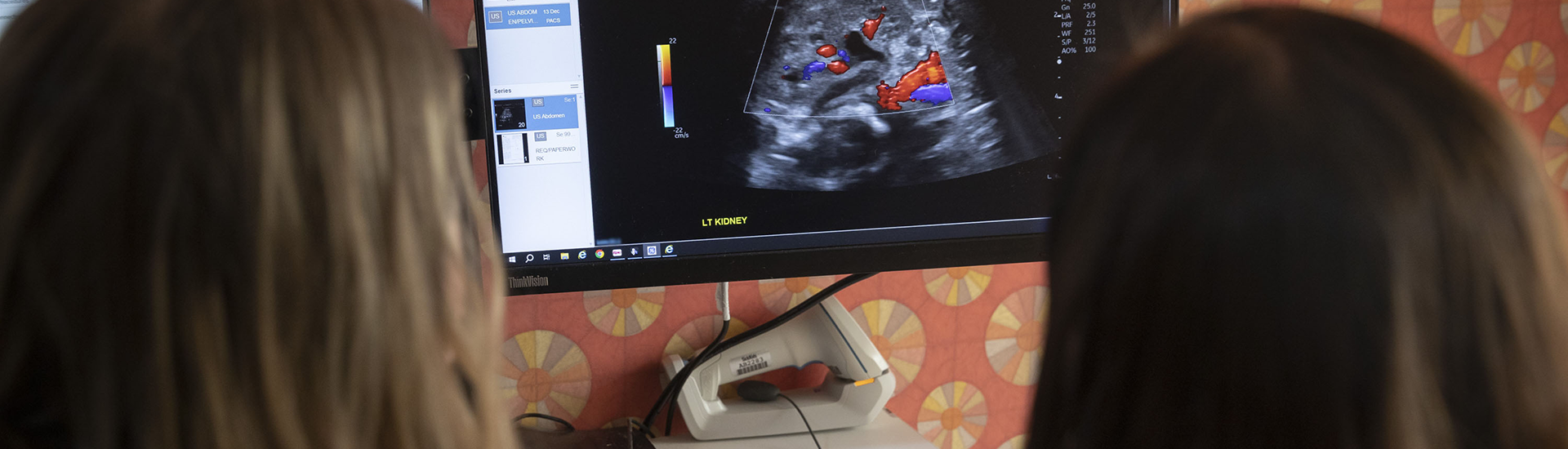

Pictured above: Mandy Rickard (left) and Lauren Erdman (right) look at a kidney ultrasound image on a computer screen.

Mandy Rickard, Nurse Practitioner in the Urology Clinic at SickKids, performs and analyzes kidney ultrasounds almost daily as lead of the pre- and postnatal hydronephrosis clinic. In the clinic, she cares for up to 100 children a month with hydronephrosis, a swelling of the kidney that can be caused by a blockage in the urinary tract.

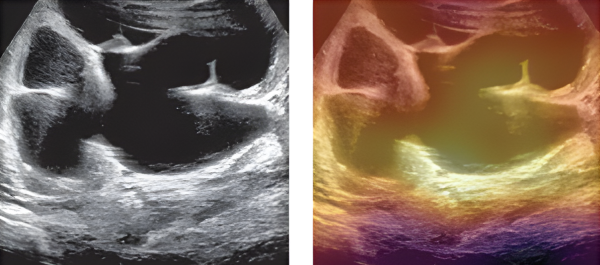

While analyzing the ultrasounds, Rickard looks at the degree of hydronephrosis and other characteristics that may suggest an obstruction that needs to be operated on. She also oversees an AI model developed to predict the severity of hydronephrosis cases and help providers identify appropriate care pathways. Over time, Rickard found that she was able to anticipate the AI model’s behaviour and intuit how it would categorize an ultrasound.

In new research published in NEJM AI, a peer-reviewed journal from the publishers of the New England Journal of Medicine, the research team attributed Rickard's improved ability to interpret kidney ultrasounds to her repeated exposure to the AI model. Over a three-year period, Rickard reduced the proportion of children with hydronephrosis sent for invasive nuclear scans from 80 per cent to 58 per cent.

When the model trains the user

The AI model, created by Lauren Erdman, a former PhD student at SickKids, was in a silent trial phase when Rickard began to predict the model's outcomes. During a silent trial, researchers run a model on data from prospective patients to observe how it performs, without using its outputs to inform care decisions. Even at this early stage, and without any other changes to her practice, Rickard was already predicting the model's outcomes. But why?

The research team classified Rickard’s developed intuition as a new type of bias: induced belief revision.

What is induced belief revision?

The team describes two forms. Data-induced belief revision occurs when clinical end-users, like Rickard, develop an unconscious intuition from collecting and labelling data.

Model-induced belief revision arises from repeated exposure to an AI model's inputs and outputs, which leads clinicians to anticipate what the model will predict for new, unseen cases.

With induced belief revision, changes in clinical behaviour can happen even when AI is not in use. While this type of bias proved beneficial both to care providers like Rickard and to patients with hydronephrosis, the team notes that it could also influence clinical practice in less positive ways if it is not properly observed and documented.

“This model is meant to augment, not to replace us as experts,” Rickard says.

To help address this bias, Rickard’s team suggests minimizing a clinical user’s chance of being influenced by a model, especially when one person provides the bulk of critical care. Doing so could help maintain the important distinction between true clinical judgement and a model’s predictions.

AI research at SickKids

AI has the power to improve patient experience and reduce provider fatigue when used responsibly. At SickKids, using AI ethically is possible due in large part to a framework developed by bioethicist Dr. Melissa McCradden.

The framework established the silent-trial-to-clinical-trial pipeline and led to the creation of an AI Regulatory Advisement Board that helps research move smoothly from ideation to clinical trial.

“At SickKids, we’re actively shaping guidelines for the responsible development and safe implementation of AI in health care,” says Jethro Kwong, a Urology resident at SickKids and first author on the paper.

With the model's success, the team is moving to a clinical trial and will implement the model in a patient-first way by prioritizing informed consent.

“Our ultimate goal is to put this tool into communities where there may not be a urology expert, to preserve hospital resources and potentially save families from taking repeated, sometimes lengthy trips to the hospital,” Rickard says. “By embedding our model into care, we can hopefully reduce the number of low-risk hydronephrosis cases that escalate to a renal scan, ultimately streamlining testing and surgery for those whom it would benefit.”
